Neural USD: An object-centric framework for iterative editing and control

Overview

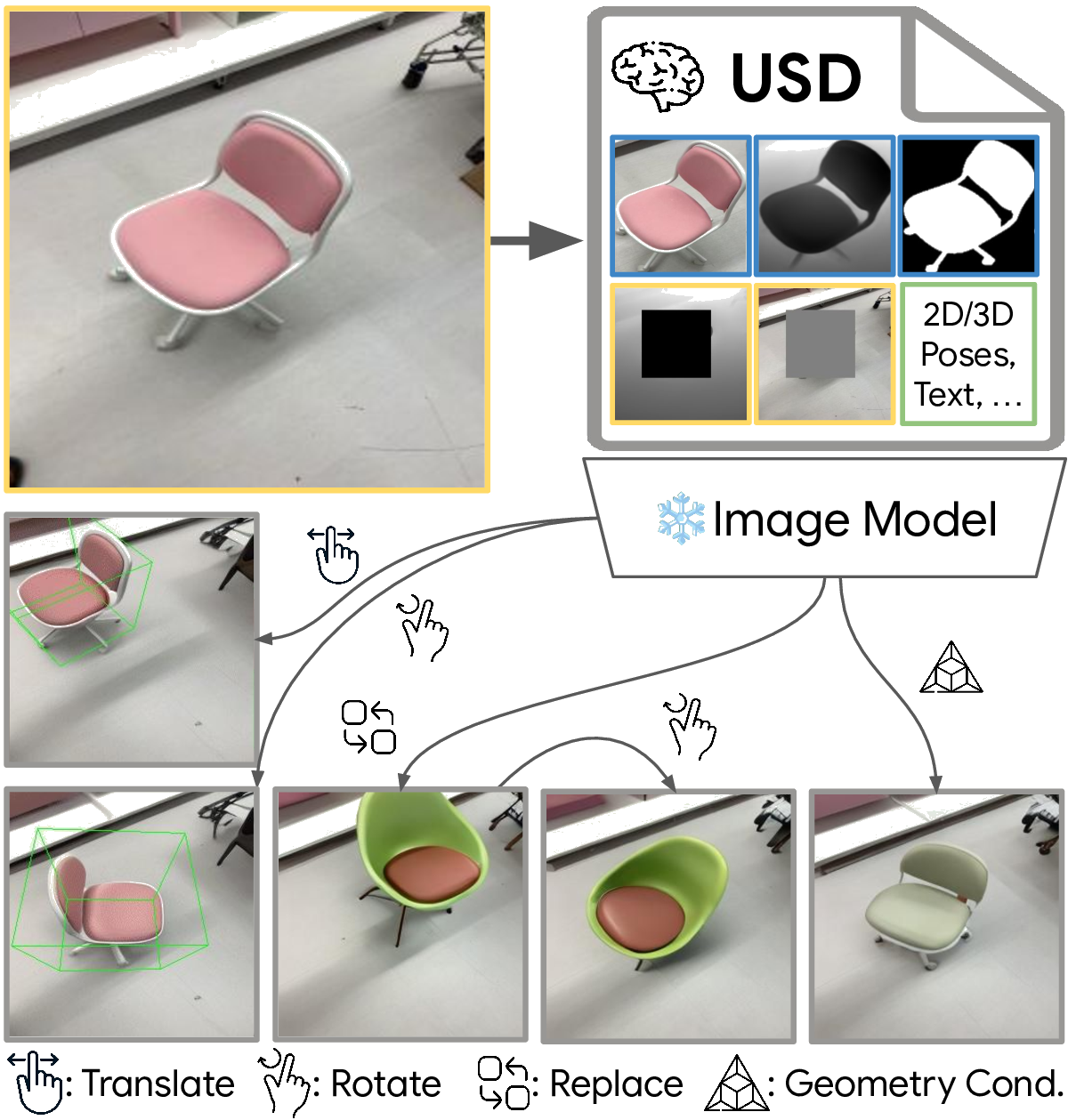

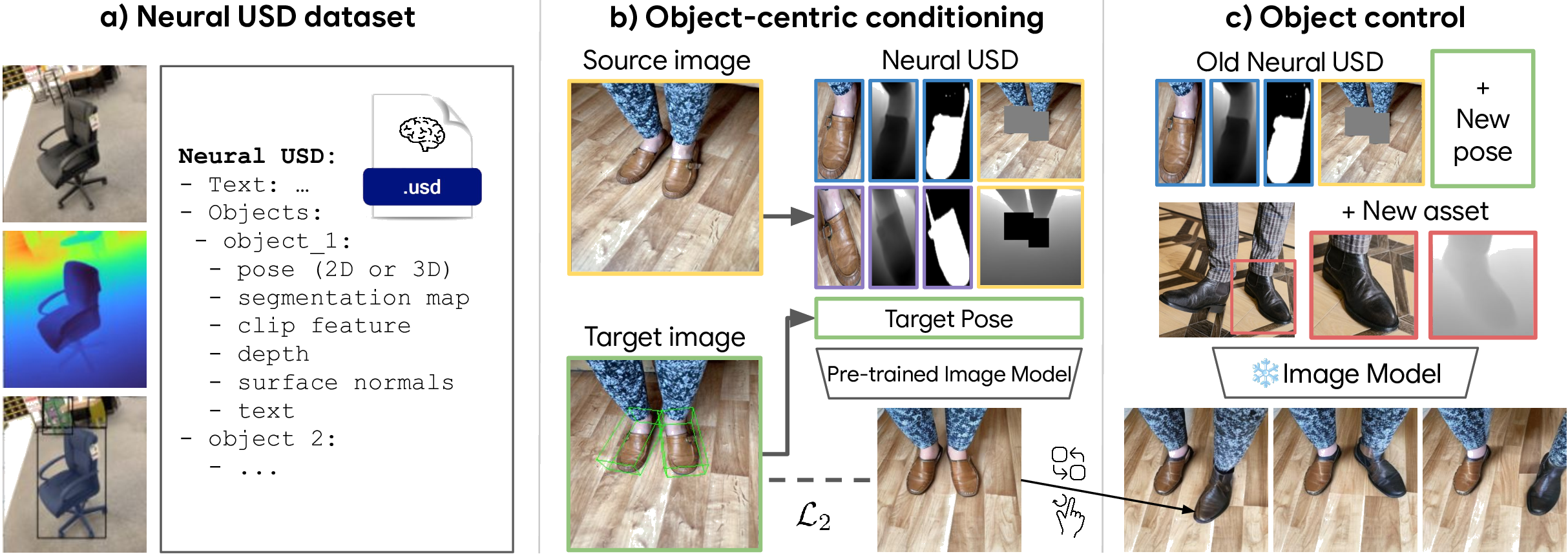

Controllable generative modeling has advanced rapidly in recent years, but precise, iterative object editing remains a challenge. In many current methods, editing a generated image by changing the conditioning signals (for example, changing the color of a particular object, or changing the background while keeping other elements unchanged) often leads to unintended global changes in the scene. In this work, we take first steps toward addressing this challenge. Taking inspiration from the Universal Scene Description (USD) standard developed in the computer graphics community, we introduce the “Neural Universal Scene Descriptor”, or Neural USD. In this framework, we represent scenes and objects in a structured, hierarchical manner. This representation accommodates diverse signals, minimizes model-specific constraints, and enables per-object control over appearance, geometry, and pose. We further apply a fine-tuning approach that ensures these control signals are disentangled from one another. We evaluate several design considerations for our framework and demonstrate how Neural USD enables iterative and incremental workflows.

Method

A Neural USD consists of assets with multiple modalities: appearance, geometry, and pose. Pre-trained image models are fine-tuned on Neural USD data: appearance and geometry are encoded from a source image and pose from a target image, and the model learns to reconstruct the target. At inference time, the pose, geometry, and appearance of each object, including the background, can be modified.
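The structure described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `Asset`, `NeuralUSD`, and `make_training_conditioning` are hypothetical, and plain lists of floats stand in for whatever appearance, geometry, and pose encodings the model actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Asset:
    """One object (or the background) in a scene. All fields are hypothetical."""
    asset_id: str
    appearance: Optional[List[float]] = None  # placeholder for an appearance encoding
    geometry: Optional[List[float]] = None    # placeholder for a geometry encoding
    pose: Optional[List[float]] = None        # placeholder for a pose encoding


@dataclass
class NeuralUSD:
    """A scene as a collection of assets keyed by id (assumed layout)."""
    assets: Dict[str, Asset] = field(default_factory=dict)

    def add(self, asset: Asset) -> None:
        self.assets[asset.asset_id] = asset


def make_training_conditioning(source: NeuralUSD, target: NeuralUSD) -> NeuralUSD:
    """Take appearance and geometry from the source scene and pose from the target."""
    cond = NeuralUSD()
    for asset_id, src in source.assets.items():
        tgt = target.assets.get(asset_id)
        if tgt is None:
            continue  # assumption: skip assets that do not appear in the target
        cond.add(Asset(asset_id=asset_id,
                       appearance=src.appearance,
                       geometry=src.geometry,
                       pose=tgt.pose))
    return cond
```

Under these assumptions, the resulting conditioning would be passed to the image model together with the target image as the reconstruction objective. Because each modality is a separate field on each asset, an edit at inference time amounts to changing one field of one asset and leaving the rest untouched.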

Control Examples

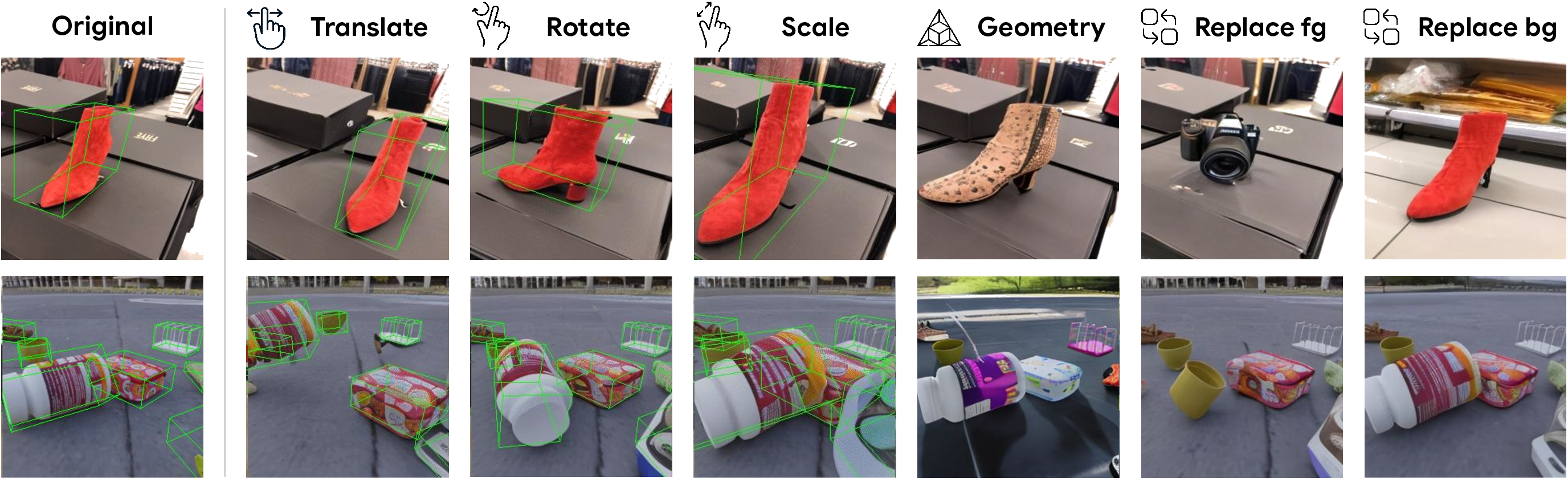

Neural USD allows users to perform a variety of pose, appearance, and geometry modifications on both foreground objects and the background.

Object Replacement

Object replacement examples with appearance and geometry conditioning (top) and geometry-only conditioning (bottom).
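As a hedged sketch of the two replacement modes, the snippet below swaps one asset's geometry (and optionally its appearance) for a donor's while keeping the original pose. The dictionary layout, the function name `replace_object`, and the choice to set appearance to `None` in the geometry-only case are all assumptions made for illustration; the source does not specify how the framework represents an unspecified appearance.

```python
from copy import deepcopy
from typing import Any, Dict

# Hypothetical scene layout: asset id -> {"appearance", "geometry", "pose"}
Scene = Dict[str, Dict[str, Any]]


def replace_object(scene: Scene, asset_id: str, donor: Dict[str, Any],
                   with_appearance: bool = True) -> Scene:
    """Return an edited copy of `scene`; the input scene is not modified.

    The selected asset takes the donor's geometry and keeps its own pose.
    With `with_appearance=True` it also takes the donor's appearance;
    otherwise appearance is left unspecified (assumed here to be None).
    """
    edited = deepcopy(scene)
    asset = edited[asset_id]
    asset["geometry"] = deepcopy(donor["geometry"])
    if with_appearance:
        asset["appearance"] = deepcopy(donor["appearance"])
    else:
        asset["appearance"] = None  # geometry-only conditioning (assumption)
    return edited
```

Returning an edited copy rather than mutating in place keeps every intermediate scene available, which suits the iterative, incremental workflow the framework is meant to support.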

Neural USD Examples

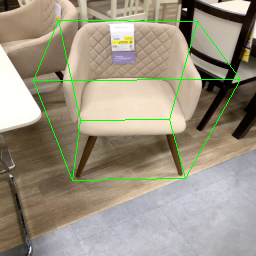

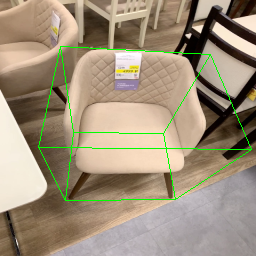

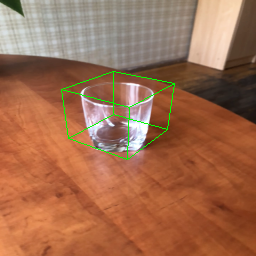

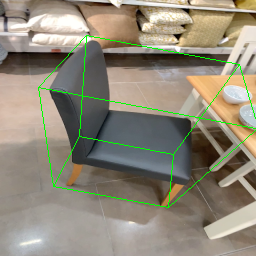

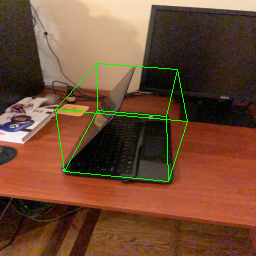

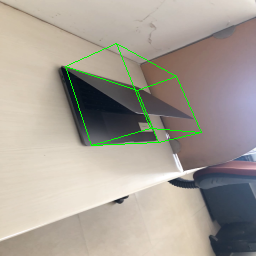

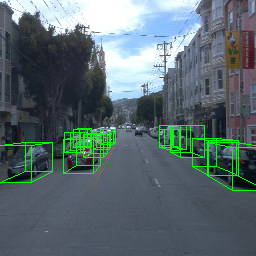

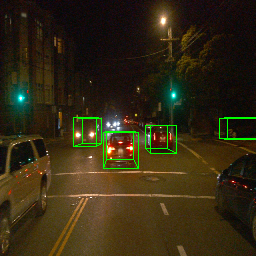

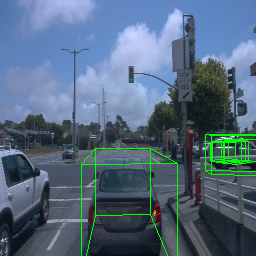

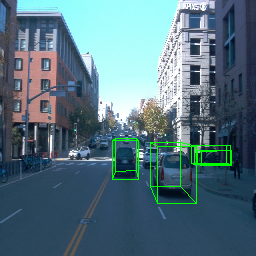

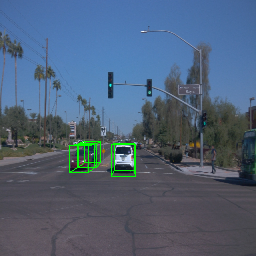

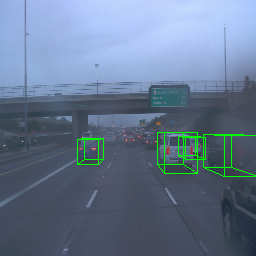

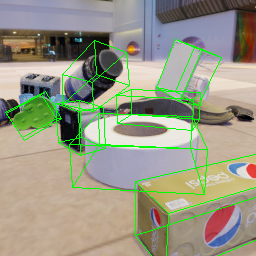

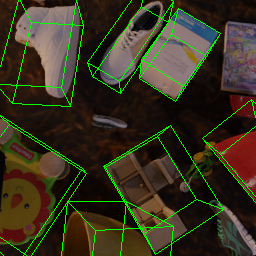

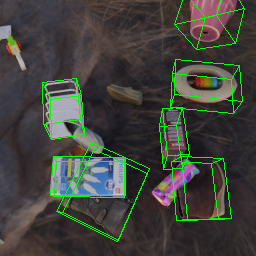

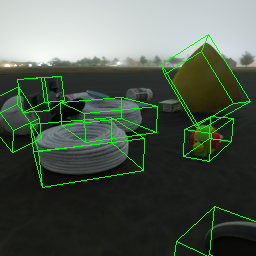

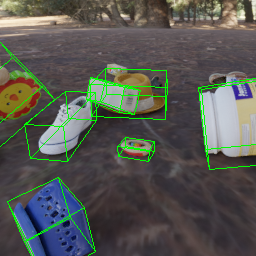

Below we demonstrate some of the interactive operations made possible by using Neural USD. We showcase examples on four well-known datasets: Objectron, Waymo, MOVI-E, and Ego4D Egotracks.

Objectron

Waymo

MOVI-E

Egotracks

How to cite


@article{Escontrela25arXiv_NeuralUSD,
  journal = {CoRR},
  author = {Escontrela, Alejandro and Kushagra, Shrinu and Steenkiste, Sjoerd van and Rubanova, Yulia and Holynski, Aleksander and Allen, Kelsey and Murphy, Kevin and Kipf, Thomas},
  keywords = {Computer Vision and Pattern Recognition (cs.CV)},
  title = {Neural USD: Scalable Scene Editing via Differentiable Universal Scene Description},
  publisher = {arXiv},
  copyright = {Creative Commons Attribution 4.0 International},
  year = {2025},
  eprint = {2510.23956},
  archiveprefix = {arXiv},
  primaryclass = {cs.CV},
}